Sunday Bonus Edition: 15 days of using the Manus AI agent

Thank you for being a part of the journey. This is a special Sunday bonus edition of The Lindahl Letter publication. A new edition arrives every Friday. This Sunday the topic under consideration for The Lindahl Letter is, “15 days of using the Manus AI agent.”

BLOT: After using the Manus AI orchestration agent wrapper for 15 days, I found it to be a powerful orchestration tool with real potential, but it still has limits around task continuation, context window handling, and support for divergence in multi-step workflows. Manus offers strong replay and task execution features, but the pricing and credit system makes it difficult to support ongoing complex projects. My top five takeaways focus on the importance of continuation, recovering from context window limits, adding save points, aggregating replays, and supporting stacked or connected tasks.

15 days ago I started running tasks with the Manus AI agent and decided to share an initial bonus Substack post about my experience [1]. Knowledge workers are staring at the future. It will be here before you know it. Manus as an implementation is an example of the tip of the spear for these orchestration environments. Going forward a subset of people probably knowledge workers are going to specialize in working with these orchestrations of agents. You can already see companies like Manus selling pro plans to enable this type of service. This morning felt like the right point in my experience of working with an AI agent that handles orchestrated tasks to sit down and reflect on the entire process.

Continuation between tasks is something you almost entirely have to manage and be mindful about yourself by asking for the content to be stored as a zip file and writing a continuation prompt for the next task. You have to avoid contributing to throw away tasking. Initially I gave it prompts to read a paper I had written about a framework for reducing knowledge based on the MapReduce framework used by Google and was able to get some code back each day using my 1 daily task credit [2]. A lot of that initial task by prompt to create initial python code based on the paper as a prompt has been shared back on my GitHub under the various task Manus task folders [3]. Manus opens an Ubuntu instance for each task session and is using Anthropic's Claude 3.7 Sonnet model to power the actual work occurring within the orchestration. Interestingly enough they gave me a single task each day during my 15 days of usage and I tried to make the best use of that free experience.

Maybe the most interesting part about what the Manus team ended up doing was how they elected to share the playback and recording of each task effort with the end user and publicly [4]. Those replays are probably the foundational reason for them running this free experiment to gather a ton of examples of how the orchestration runs and works to see what hits the limit for the context window and what works during very long execution sessions that sometimes seemed to hit 5+ hours of todo style task list completion and iteration. I exclusively used the Manus agent to write code for either Python repos or .ipynb files that were geared to execute within the Google Colab environment. The repo with the 9th attempt results has a good example of a working and updated .ipynb file that follows the style and execution breakdown of my previous work [5]. It really was able to augment my work and that singularly was an interesting outcome to take a moment and consider.

You probably have the same sort of question developing in the background of your thoughts on this one like I did during my contemplation of this Manus AI agent doing orchestration as a wrapper on a model like Claude for execution. As the models become increasingly commoditized which is interesting as huge sunk costs in the billions were spent to create these foundational large language models and then they are getting distilled and otherwise trained at an order of magnitude less cost and time. Foundational models are now everywhere and you can still go back and read the 214 page paper that tons of people signed on to from Stanford talking about them in great detail [6]. The word cost occurs a little over 100 times in the paper however at the time it was understood that due to the actual computational cost and engineering most research teams or individual researchers could not do this type of work. Things change rapidly in the AI space and now in 2025 DeekSeek-AI and other groups have flipped the script on that assumption [7]. Training cost and model building went from the billions to the millions in terms of cost. All of those sunk costs from the initial foundational model training are now locked in and are not ever going to be recovered from go forward revenue. The entire maco model building game has changed and the initial foundational models needed to be built, but they will be like a reference architecture or point in time save point going forward.

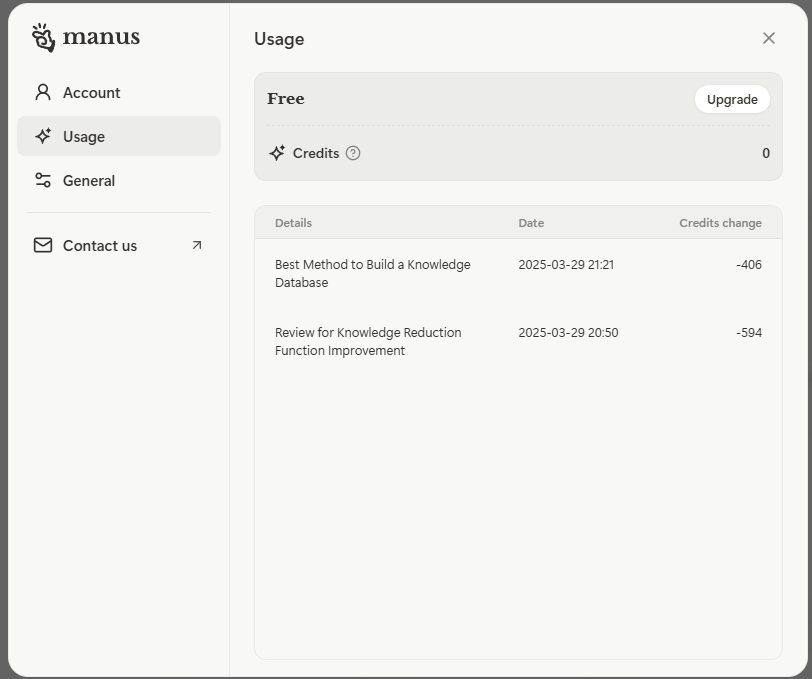

My 15-day deep dive into the Manus orchestration wrapper concluded when they moved from the beta launch to having actual plans. My account was moved to the free tier and they did grant me 1,000 credits to finish up whatever was currently in progress. Those credits were spent very quickly (within hours) with 406 going to some methods research and 594 being consumed by a now incompleted review for knowledge reduction function improvement .ipynb file.

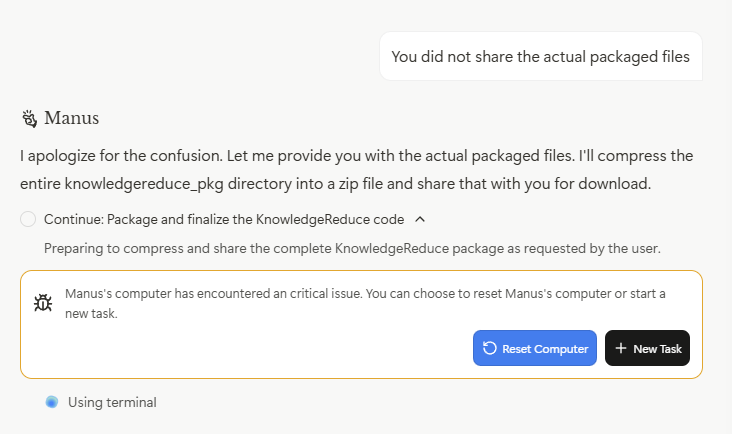

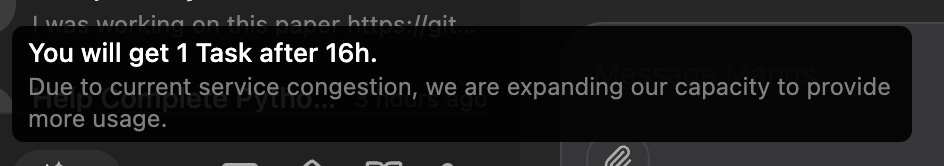

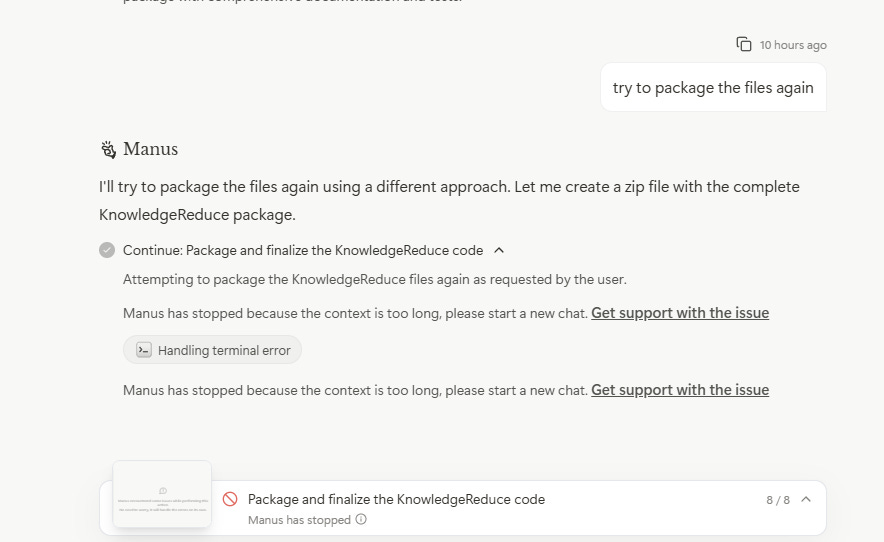

It was a good decision for the Manus team to put in some of these plan based gates for usage and I’m sure the cost of running this type of effort opening and allowing such lengthy agent orchestration was significant. Several times I was able to get either the “Manus has stopped because the context is too long, please start a new chat” error message or the “Manus’s computer has encountered an critical issue. You can choose to reset Manu’s computer or start a new task” error message. They experienced a huge amount of current service congestion and constantly were promising to be expanding capacity to provide more usage. I was able to run a task pretty much every day and sometimes did have to try several times within a 30 minutes window to get the task to finally execute. They actually seemed to have built in some queuing instead of just failing the task toward the end of my team with the product which was smart and showed that the product is being enhanced based on actual user experiences.

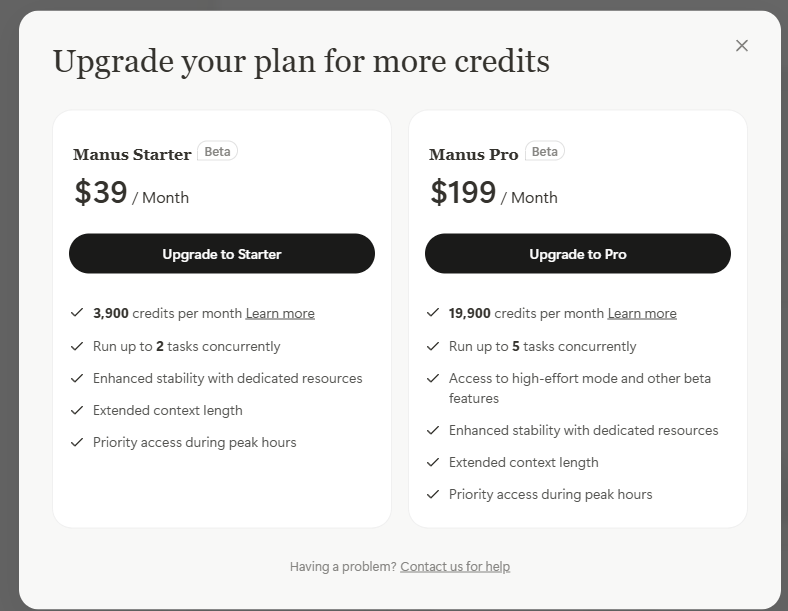

Right now the Manus team wants me to upgrade my plan to the starter beta for $39 a month or the pro beta plan for $199 which feature 3,900 credits or 19,900 credits respectively. I did not elect to spend money on either service. My 1,000 credits burned down so quickly that I would crush that 19,900 pro plan credit allocation within a day or two and potentially in a single day running 5 tasks concurrently. I will admit that access to the extended context window did make me consider kicking the tires for a month. They do have a page that explains how credits work on the company website [8]. Why does any of this matter in the context of knowledge works getting supplemental help and support from orchestrated agents? Well this is a service that is going to involve a lot of computation and support. Imagine the Silicon Valley unicorn OpenAI competing against a slew of Manus type agents charging a couple hundred dollars a month and Sam Altman showing up to the table with PhD-level research for about $20,000 per month [9].

What are my top 5 learnings from this experience?

Continuation - You have to be able to package up the efforts of a task to start another task. Continuation of a contact window will be huge going forward.

Context window recovery - Really deeply complex tasking stresses the context window because during a current task no method exists to compress or reduce the context window. It’s almost like a subroutine needs to exist to pause and run a tool to compress the context window throughout the process at a certain percentage of utilization.

Save points - Within these really long todo style executions it would be helpful to be able to go back to the replay and ask the model to start again at some save point and take a different direction.

Replay aggregation - It would be useful to have the model do play by play analysis of a bunch of replays to figure out how to progress if it got stuck or to work on highly complex research tasks that abridge a few hours of effort. Another way to say this would be sharing a task between team members or organizations to build on the previous work without having to totally reset the effort.

Task stacking or multi-orchestration support - Right now each orchestration of a task within the Manus environment is single serving and nothing allows the tasking of several related efforts to ultimately build a bigger and more complex repo for more adventures projects. Even having a regression test case orchestration or a deeper unit testing effort following along to clean and evaluate would start that type of multi-orchestration support.

Here are a few screenshots that I took for this missive, but did not include in the main content. Enjoy!

Things to consider this week:

What use cases in your daily knowledge work could benefit from AI-powered orchestration and automation?

Are current orchestration tools mature enough to support complex, multi-step use cases without manual task continuation?

How sustainable are credit-based pricing models for use cases that involve long or compute-intensive agent sessions?

What value could emerge from aggregating replay data across use cases to refine model performance or guide task execution?

How do emerging AI orchestration tools reflect the broader intersection of technology and modernity, and what does that mean for how we approach future use cases?

Footnotes:

[1] https://www.nelsx.com/p/using-the-manus-ai-agent-to-update

[2] Dean, J., & Ghemawat, S. (2008). MapReduce: simplified data processing on large clusters. Communications of the ACM, 51(1), 107-113. https://dl.acm.org/doi/pdf/10.1145/1327452.1327492

[3] https://github.com/nelslindahlx/KnowledgeReduce

[4] https://manus.im/share/y2t6uYJphJQPcrBYK0ufXZ?replay=1

[6] Bommasani, R., Hudson, D. A., Adeli, E., Altman, R., Arora, S., von Arx, S., ... & Liang, P. (2021). On the opportunities and risks of foundation models. arXiv preprint arXiv:2108.07258. https://crfm.stanford.edu/assets/report.pdf or https://arxiv.org/pdf/2108.07258

[7] Liu, A., Feng, B., Xue, B., Wang, B., Wu, B., Lu, C., ... & Piao, Y. (2024). Deepseek-v3 technical report. arXiv preprint arXiv:2412.19437. https://arxiv.org/pdf/2412.19437

[8] https://manus.im/help/credits

[9] https://techcrunch.com/2025/03/08/week-in-review-openai-could-charge-20k-a-month-for-an-ai-agent/

What’s next for The Lindahl Letter?

Week 188: How do we even catalog attention?

Week 189: How is model memory improving within chat?

Week 190: Quantum resistant encryption

Week 191: Knowledge abounds

Week 192: Open source repositories are going to change

If you enjoyed this content, then please take a moment and share it with a friend. If you are new to The Lindahl Letter, then please consider subscribing. Make sure to stay curious, stay informed, and enjoy the week ahead!